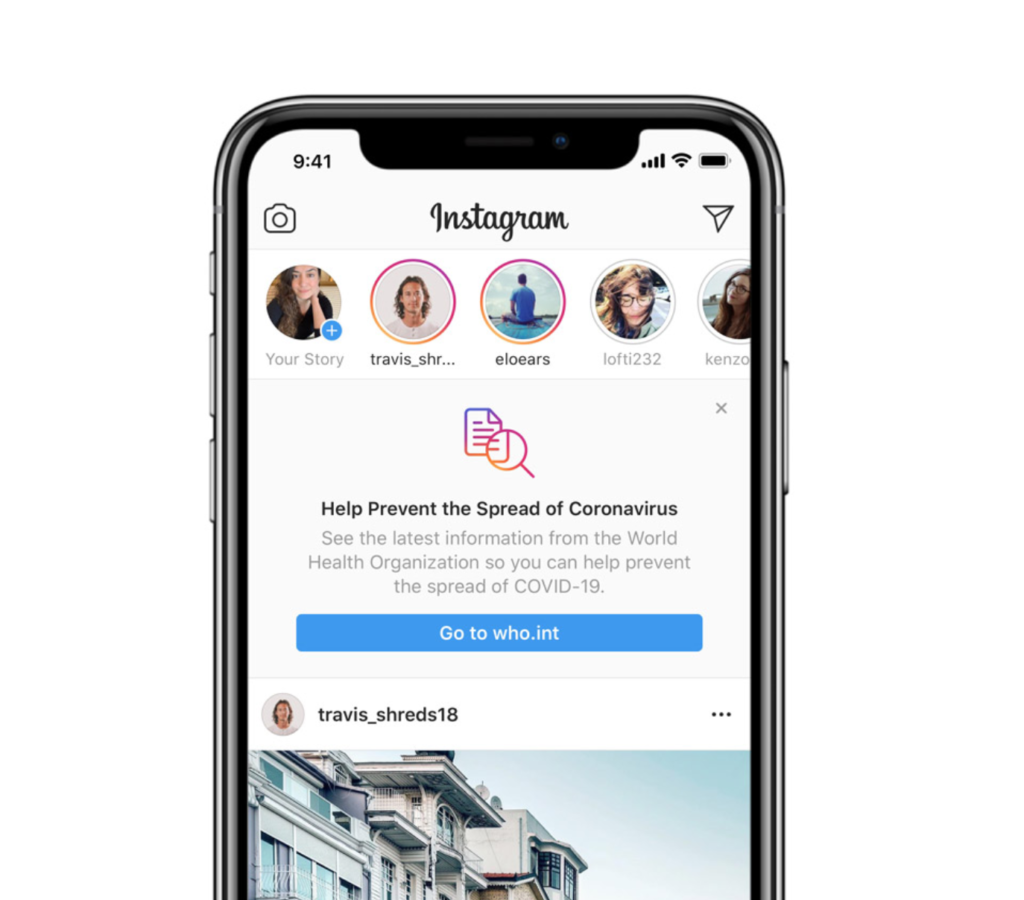

On March 24, early in the pandemic, Instagram updated its policies to promote accurate COVID-19 information on the platform. In a post titled ‘Keeping People Informed, Safe, and Supported on Instagram,’ the company announced that people searching for coronavirus content would be shown a message directing them to the World Health Organization (WHO) for accurate information.

The blog post added: “if posts are rated false by third-party fact-checkers, we remove them from Explore and hashtag pages. We also remove false claims or conspiracy theories that have been flagged by leading global health organizations and local health authorities as having the potential to cause harm to people who believe them.”

Instagram clearly tried to address misinformation early in the pandemic, and it had good reason to after the hard lessons of the 2016 election. The Facebook-owned platform was singled out for its significant role in spreading misinformation in a Senate Intelligence Committee report on Russian interference efforts, which named it “the most effective tool used by the IRA [Russia’s Internet Research Agency] to conduct its information operations campaign.”

Late last year, Instagram made further updates to its platform to ‘promote credible information.’ A blog post dated December 16, 2019, highlights its intention to expand its fact-checking program. “When content has been rated as false or partly false by a third-party fact-checker, we reduce its distribution by removing it from Explore and hashtag pages, and reducing its visibility in Feed and Stories,” the Instagram blog post reads. “In addition, it will be labeled so people can better decide for themselves what to read, trust, and share. When these labels are applied, they will appear to everyone around the world viewing that content – in feed, profile, stories, and direct messages.”

Instagram confirms that it uses image matching technology to find suspect content, as well as duplicates of content already rated false or partly false on Facebook. Links to credible articles debunking the claims can be added to false Instagram posts, and those posts are removed from Explore and hashtag pages. The Instagram help page notes that the company works with 45 third-party fact-checkers around the world who are “certified through the non-partisan International Fact-Checking Network to help identify, review and label false information.” The same page states that while Instagram is committed to warning users about false information, “the original content of politicians is not sent to third-party fact checkers for review,” and it refers users to parent company Facebook’s September 2019 announcement on political speech for further information.

The Facebook page that Instagram links to contains a transcript of a speech that Nick Clegg, Facebook’s VP of Global Affairs and Communications, gave in Washington, DC last year, outlining why the company does not fact-check politicians. “We don’t believe, however, that it’s an appropriate role for us to referee political debates and prevent a politician’s speech from reaching its audience and being subject to public debate and scrutiny,” Clegg said. He also addressed concerns about deepfakes in that speech, confirming that the company had launched a ‘Deepfake Detection Challenge’ in collaboration with the Partnership on AI, Microsoft, MIT, UC Berkeley, and Oxford. The initiative aims to identify manipulated deepfake content so that action can be taken, though Clegg didn’t specify what action Facebook would take.

Yesterday Facebook updated its policies again, announcing that it would not run ‘social issue, electoral, or political ads’ from election day onward. The decision came as a surprise, as Facebook had previously held firm on allowing those kinds of ads on its platforms. It is still not known when the ban will be lifted; in its blog post, Facebook says only that it “will notify advertisers when this policy is lifted.” The company has also prepared for the possibility that a candidate declares victory prematurely. “When polls close, we will run a notification at the top of Facebook and Instagram and apply labels to candidates’ posts directing people to the Voting Information Center for more information about the vote-counting process,” the Facebook blog post reads. “But, if a candidate or party declares premature victory before a race is called by major media outlets, we will add more specific information in the notifications that counting is still in progress and no winner has been determined.”